Quest’s ‘Body Tracking’ API Provides Only a Legless Estimate

The Quest ‘Body Tracking’ API only gives a legless estimate and not necessarily ‘organic’ avatar legs.

Meta released the Body Tracking API as part of its Movement SDK on Thursday last week. Also part of this release were the Eye Tracking API and Face Tracking API for the Quest Pro headset.

The Movement SDK is here! Now you can use body tracking, face tracking, and eye tracking to bring a user’s physical movements into the metaverse and enhance social experiences ➡️ https://t.co/2sSevy6mF6 pic.twitter.com/aqtgnXbHl3

— Oculus Developers (@Oculus_Dev) October 21, 2022

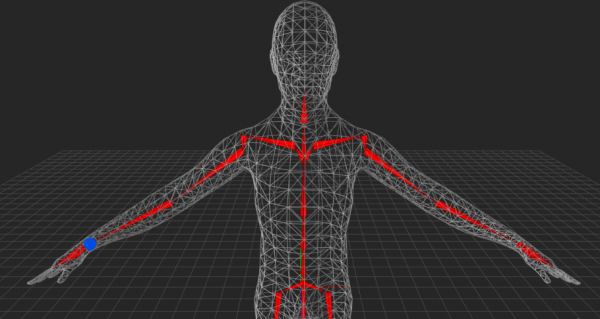

The release was announced by the official Oculus Developers Twitter account, which posted it alongside an illustration from the documentation that shows the tracking of a user’s full body pose. The announcement was shared widely, which led to the false belief that Quest had finally gotten body-tracking support. However, UploadVR reports that both the name ‘body tracking’ and the illustration that accompanied the announcement are misleading.

With Meta’s Hand Tracking API, you get the actual position of the user’s hands and fingers as tracked by the outward-facing cameras. The Eye Tracking and Face Tracking APIs give the user’s actual gaze direction and facial muscle movements as tracked by the inward-facing cameras on Quest Pro. Quest’s “Body Tracking” API, however, only gives a “simulated upper-body skeleton” based on the user’s head and hand positions, according to a Meta spokesperson. It is, therefore, not actual tracking and does not cover your legs.

The Body Tracking API is, therefore, better described as body pose estimation. According to a Meta spokesperson, the technology behind the API is a combination of machine learning (ML) and inverse kinematics (IK).

Inverse kinematics describes a class of equations used to work out how a robot’s joints must move for it to reach a desired position. The same equations can also be used to estimate the unknown parts of a skeleton from the positions that are already known. It is these kinematic equations that power all the full-body virtual reality avatars in today’s apps. When designing apps, developers don’t necessarily have to implement or understand the complex mathematics upon which inverse kinematics is based. This is because the main game engines such as Unreal and Unity have inverse kinematics built into them. Besides, there are also packages such as Final IK, an IK module that provides developers with fully fleshed-out implementations of IK for a very affordable price.
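To make the idea concrete, here is a minimal sketch of the simplest textbook case: a two-bone chain (think upper arm and forearm) solved in 2D with the law of cosines. It is not Meta’s method or any engine’s built-in solver. The function name `solve_two_bone`, its parameters, and the sample lengths are all hypothetical, chosen only for illustration.

```python
import math

def solve_two_bone(target_x, target_y, upper_len, lower_len, bend=1):
    """Place the elbow and wrist of a 2D two-bone chain rooted at the origin.

    bend=+1 and bend=-1 give the two mirror-image elbow positions.
    Returns ((elbow_x, elbow_y), (wrist_x, wrist_y)).
    """
    dist = math.hypot(target_x, target_y)
    # Clamp the distance so an unreachable target is approached as closely as possible.
    reach_min = abs(upper_len - lower_len)
    reach_max = upper_len + lower_len
    dist = max(reach_min, min(reach_max, dist), 1e-9)

    # Law of cosines: interior angle at the elbow.
    cos_elbow = (upper_len**2 + lower_len**2 - dist**2) / (2 * upper_len * lower_len)
    elbow_interior = math.acos(max(-1.0, min(1.0, cos_elbow)))

    # Law of cosines again: angle between the upper bone and the line to the target.
    cos_inner = (upper_len**2 + dist**2 - lower_len**2) / (2 * upper_len * dist)
    inner = math.acos(max(-1.0, min(1.0, cos_inner)))

    shoulder_angle = math.atan2(target_y, target_x) + bend * inner
    # The forearm turns back toward the target by the exterior elbow angle.
    forearm_angle = shoulder_angle - bend * (math.pi - elbow_interior)

    elbow = (upper_len * math.cos(shoulder_angle),
             upper_len * math.sin(shoulder_angle))
    wrist = (elbow[0] + lower_len * math.cos(forearm_angle),
             elbow[1] + lower_len * math.sin(forearm_angle))
    return elbow, wrist

if __name__ == "__main__":
    # Hypothetical arm: 0.30 m upper arm, 0.25 m forearm, hand at (0.3, 0.2).
    for bend in (1, -1):
        elbow, wrist = solve_two_bone(0.3, 0.2, 0.30, 0.25, bend)
        print(bend, elbow, wrist)
```

Both values of `bend` put the wrist on the same target but place the elbow differently, so even this small case has more than one valid answer.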

However, inverse kinematics for virtual reality is usually inaccurate unless one is using body tracking hardware like HTC’s Vive Trackers. Without the hardware, there are just too many possible solutions for a given set of hand and head positions.

Meta is pitching its ‘Body Tracking’ API as a machine-learning model that can generate a more accurate body pose at no cost. The demo video of the API suggests as much, although without the lower half of the body. Support for the API is also limited to Quest headsets, which is likely to put off many developers.

At the Meta Connect 2022 event, the company hinted that legs will be incorporated in the future. This is also supported by the company’s own research.

Meta’s Body Tracking Product Manager Vibhor Saxena told developers that new body-tracking improvements in the coming years will be made available via the same API, giving developers access to the “best body tracking technology” without switching to a different interface. Saxena says Meta is working “to make body tracking much better” in the coming years.

During the main keynote at Meta Connect 2022, Zuckerberg stated that Meta Avatars will soon get legs, although the demonstration of the avatars with legs was not made in virtual reality. Legs on avatars are coming to Horizon Worlds before the end of the year, with the SDK for other Meta apps set to launch in 2023. Saxena also confirmed that Meta’s Body Tracking API uses the same underlying technology that powers Meta Avatars. This suggests that the API will also get legs.

The Body Tracking API (which produces an estimate based on the positions of the head and hands) is a product of Meta research that the company showcased last month. The research leverages recent machine learning advances.

The system that Meta showed off is not 100% accurate and has 160ms of latency, which is more than 11 frames at 72Hz. The output is both slow and imperfect, so don’t expect to look down and see your legs in the position you expect them to be in. Such latency does not give you a fully immersive and convincing experience, although Meta is contemplating other use cases for less-than-perfect avatar legs.
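The frame figure follows from simple arithmetic: one frame at 72Hz lasts 1000 / 72 ≈ 13.9ms, so 160ms spans about 11.5 frames. The short sketch below runs the same calculation. The helper name `latency_in_frames` is made up for this example, and the 90Hz and 120Hz rows are included only for comparison, not because the research reports them.

```python
def latency_in_frames(latency_ms, refresh_hz):
    """Convert a latency in milliseconds into a number of display frames."""
    frame_time_ms = 1000.0 / refresh_hz
    return latency_ms / frame_time_ms

if __name__ == "__main__":
    # 160 ms is the latency quoted for the research system; 72 Hz is the rate cited above.
    for hz in (72, 90, 120):
        print(f"{hz} Hz: {latency_in_frames(160, hz):.2f} frames")
```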

Meta’s CTO has made comments implying that the tech can, instead, be used to show legs on other people’s avatars, because of the “disconcerting effect” of having legs on your own avatar that don’t match your real legs. Legs shown on other people’s avatars, by contrast, don’t bother anyone looking at them.

Meta says that it is thus working on legs that will look natural to bystanders, who have no idea how the user’s real legs are actually positioned.

Meta’s upcoming avatar legs won’t necessarily match the quality shown in its research. Machine learning papers are often powered by powerful PC GPUs with a very high framerate, and the research paper does not provide any detail on the system’s runtime performance.

Source: https://virtualrealitytimes.com/2022/10/24/quests-body-tracking-api-provides-only-a-legless-estimate/

Author: Rob Grant, Virtual Reality Times