Holographic Display Maker VividQ Partners With Waveguide Maker Dispelix for New 3D Holographic Imagery Technology

Holographic display technology manufacturer VividQ has partnered with the waveguide designer Dispelix to develop a new 3D holographic imagery technology.

According to a statement from the companies, this kind of imagery technology was impossible just two years ago. The companies designed a “waveguide combiner” capable of accurately displaying simultaneous variable-depth 3D content inside the user’s environment.

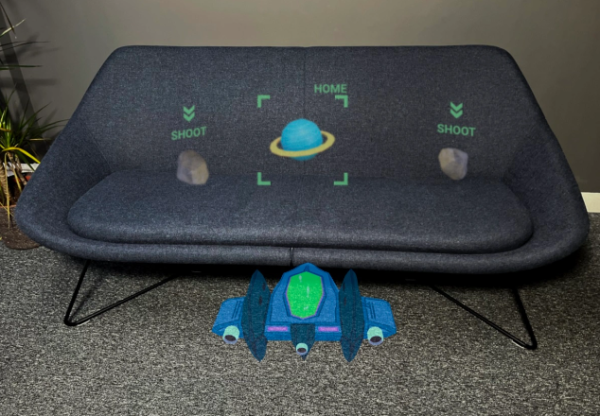

This technology will let users immerse themselves in Augmented Reality gaming experiences in which digital content is superimposed on their physical environment and can be interacted with in a natural and comfortable way. The 3D holographic imagery technology can be used in wearable devices such as smartglasses and augmented reality headsets.

VividQ and Dispelix also announced a commercial partnership under which they will develop the technology and make it ready for mass production. This will give headset makers the ability to launch their AR product roadmaps immediately.

Current augmented reality experiences offered by the likes of Magic Leap, Vuzix, Microsoft HoloLens, and other AR hardware makers deliver 2D stereoscopic images at fixed focal distances, or at one focal distance at a time. This causes eye fatigue and nausea during use and falls short of an ideal immersive three-dimensional experience. For instance, objects can’t be interacted with naturally at arm’s length, and they aren’t placed precisely within the physical environment.

To provide the immersive experiences needed for Augmented Reality to reach mainstream adoption, a sufficient field of view is required. It should also be possible to focus 3D images across the full range of natural distances, from 10 cm to optical infinity, just as we view physical objects.
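To put the focus range above into numbers, optical focus demand is commonly expressed in diopters, the reciprocal of the distance in metres. The sketch below is only an illustration: the helper names and the 2 m fixed focal plane are assumptions made for the example, not figures from VividQ or Dispelix.

```python
import math

def diopters(distance_m: float) -> float:
    """Focus demand in diopters (1/m) for an object at distance_m metres."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 1.0 / distance_m  # 1 / inf evaluates to 0.0 (optical infinity)

def focus_mismatch(object_m: float, focal_plane_m: float) -> float:
    """Gap in diopters between where content should focus and where a
    fixed-focus display actually focuses it."""
    return abs(diopters(object_m) - diopters(focal_plane_m))

# Range quoted in the article: 10 cm to optical infinity.
near, far = diopters(0.10), diopters(math.inf)
print(f"focus range to cover: {far:.1f} D to {near:.1f} D")

# Hypothetical fixed-focus headset (2 m focal plane, an assumed value)
# showing an object at arm's length (0.5 m).
print(f"mismatch: {focus_mismatch(0.5, 2.0):.1f} D")
```

Under these assumed numbers, an object at arm's length sits 1.5 D away from the display's focal plane, which is the kind of gap a variable-depth display is meant to close.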

A waveguide combiner is currently the industry’s preferred method for displaying augmented reality images inside a compact form factor. This next-gen waveguide technology, along with the accompanying software, has been optimized for 3D applications such as gaming, allowing consumer brands to unlock the potential of the market.

Waveguides, which are also referred to as ‘waveguide combiners’ or ‘combiners’, enable a lightweight, conventional-looking front face for augmented reality headsets and will be crucial to the mainstream adoption of the technology.

Waveguides not only offer form-factor advantages; they also allow for a process known as pupil replication, which takes the image from a small display panel (a small ‘eyebox’) and makes it larger by creating a grid of copies of that small image in front of the viewer’s eye. This is like a periscope, but with multiple views instead of a single view. It is crucial for augmented reality wearables that are ergonomic and easy to use.

Smaller eyeboxes are extremely difficult to align with the user’s pupil, so the eye easily “falls off” the image when the alignment is incorrect. The headset therefore has to be fitted precisely to the user, because variations in Inter-Pupillary Distance (IPD) can keep the eye from lining up with the eyebox, leaving the user unable to see the virtual image.

Fundamentally, there is a tradeoff between the image size (“eyebox” or “exit pupil”) and the Field of View (FOV) of a display. Replication lets the optical designer keep the native eyebox very small and rely on the replication process to present a large effective image to the viewer, while simultaneously maximizing the FOV.
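The replication and IPD alignment described above can be illustrated with a simple one-dimensional geometric model. This is a rough sketch only: the function names, the 2 mm exit pupil, the copy count, and the IPD values are all hypothetical, and real waveguide design involves far more than this tiling arithmetic.

```python
def effective_eyebox_mm(exit_pupil_mm: float, copies: int, pitch_mm: float) -> float:
    """Width covered by `copies` replicas of the exit pupil along one axis.

    Assumes pitch_mm <= exit_pupil_mm, so neighbouring copies tile without gaps.
    """
    if copies < 1:
        raise ValueError("need at least one copy of the exit pupil")
    return exit_pupil_mm + (copies - 1) * pitch_mm

def pupil_in_eyebox(offset_mm: float, eyebox_mm: float) -> bool:
    """True if an eye displaced by offset_mm from the eyebox centre is still inside it."""
    return abs(offset_mm) <= eyebox_mm / 2

# Hypothetical fit: headset built for a 63 mm IPD, worn by a user with 68 mm.
design_ipd_mm, user_ipd_mm = 63.0, 68.0
offset = (user_ipd_mm - design_ipd_mm) / 2  # each eye sits 2.5 mm off-centre

single = effective_eyebox_mm(2.0, copies=1, pitch_mm=2.0)      # 2 mm wide
replicated = effective_eyebox_mm(2.0, copies=5, pitch_mm=2.0)  # 10 mm wide

print(pupil_in_eyebox(offset, single))      # False: the eye "falls off" the image
print(pupil_in_eyebox(offset, replicated))  # True: replication absorbs the IPD mismatch
```

With these assumed values, the unreplicated 2 mm eyebox misses the user's pupil, while five copies widen it enough to tolerate the 5 mm IPD difference.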

VividQ CEO Darran Milne said that although considerable investment and research had gone into technology capable of creating augmented reality experiences, these efforts fell short of even the most basic user expectations. The fundamental issue the industry has always faced, he said, is the complexity of displaying 3D images placed in the world with a decent field of view and an eyebox large enough to accommodate a wide range of IPDs, all inside a lightweight lens.

The VividQ boss says the two companies have solved the problem by designing something which can be manufactured and that they have tested and proven it while also creating the manufacturing partnership required to mass produce the technology. The companies say this technology is a breakthrough as AR can’t be delivered without 3D holography. According to the companies, their partnership has created a window through which users will be able to experience both real and digital worlds “in one place.”

VividQ’s 3D waveguide combiner, whose patent is still pending, has been built to work with the company’s software. Both the combiner and the software are licensable by wearable manufacturers so as to develop a wearable product roadmap.

VividQ’s holographic display software is compatible with standard games engines such as Unreal Engine and Unity, allowing games developers to easily create new experiences. The 3D waveguide can be built and supplied at scale via Dispelix, a Finland-based manufacturer of see-through waveguides for wearables and VividQ’s manufacturing partner.

Wearable Augmented Reality (AR) devices offer great potential in applications like gaming and professional use. Dispelix CEO Antti Sunnari says content ought to be true 3D in scenarios where users will be immersed in the experience for extended periods. Good immersion also minimizes motion sickness and fatigue.

VividQ has demonstrated its software and 3D waveguide technology at its headquarters in Cambridge, UK, to the consumer tech brands and device manufacturers it is partnering with to make the next generation of Augmented Reality wearables. With such breakthroughs in AR optics, 3D holographic gaming will soon become a reality.

As recently as 2021, the technology that the two companies have created was regarded as “quasi-impossible”.

Current waveguide combiners work on the assumption that the incoming light rays are parallel, as they need the light to bounce around inside the structure along paths of similar length. If diverging rays (a 3D image) were inserted, the light paths would all differ depending on where in the input 3D image each ray came from.

This is problematic because the extracted light has then traveled different distances. The user sees several partially overlapping copies of the input image, all appearing at random distances, which makes the result unusable in any application. The new 3D waveguide combiner can adapt to diverging rays and display the images correctly.
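One simplified way to see why parallel rays tolerate replication while diverging rays do not is to track the extra path each pupil copy accumulates inside the waveguide. The toy model below is an assumption-laden sketch, not VividQ's or Dispelix's method: it treats the waveguide as a flat slab, ignores the refractive index, and assumes each copy is extracted after one more round trip between the surfaces. The function names, the 1 mm thickness, and the 60° internal angle are hypothetical.

```python
import math

def extra_path_per_copy_mm(thickness_mm: float, angle_deg: float, copies: int) -> list:
    """Extra geometric path travelled before each successive pupil copy exits.

    angle_deg is the ray angle measured from the surface normal (assumed value).
    One round trip crosses the slab thickness twice.
    """
    theta = math.radians(angle_deg)
    round_trip = 2 * thickness_mm / math.cos(theta)
    return [k * round_trip for k in range(copies)]

def apparent_distances_mm(source_mm: float, extra_paths_mm: list) -> list:
    """Toy model: a wavefront that travels s mm further appears to come
    from a point s mm further away."""
    return [source_mm + s for s in extra_paths_mm]

paths = extra_path_per_copy_mm(thickness_mm=1.0, angle_deg=60.0, copies=3)

# Parallel rays (image at optical infinity): every copy still appears at infinity.
print(apparent_distances_mm(math.inf, paths))

# Diverging rays from a point 300 mm away: each copy appears at a different depth.
print(apparent_distances_mm(300.0, paths))
```

In this sketch the copies of an image at infinity all agree, whereas the copies of a nearby point land at different apparent depths, which matches the overlapping-copies problem the article describes.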

VividQ’s 3D waveguide consists of two elements. The first is a modification of the standard pupil-replicating waveguide design. The second is an algorithm that computes a hologram and corrects for the distortion caused by the waveguide. The hardware and software components are designed to work together, so the VividQ waveguide can’t be used with any other software or system.

VividQ has so far raised $23 million, with the funding coming from deep tech companies in Austria, Germany, the UK, Japan, and Silicon Valley.

Source: https://virtualrealitytimes.com/2023/01/18/holograpgic-display-maker-vividq-partners-with-waveguide-maker-dispelix-for-new-3d-holographic-imagery-technology/ (Rob Grant, Virtual Reality Times, Technology)