HOT3D Dataset: New Meta Reality Labs AI Research Could Enhance the Capabilities of Quest Headsets

Despite advancements in XR hardware and software, XR devices still fall short when it comes to understanding the interactions between humans and objects.

Machine learning models still struggle to understand human-object interactions because of obstacles such as hand occlusions. Hands also move in very complex ways, and it will take time for machines to fully understand and accurately translate these interactions. Meta Reality Labs hopes its AI research will resolve some of these challenges and unlock exciting new applications for these devices.

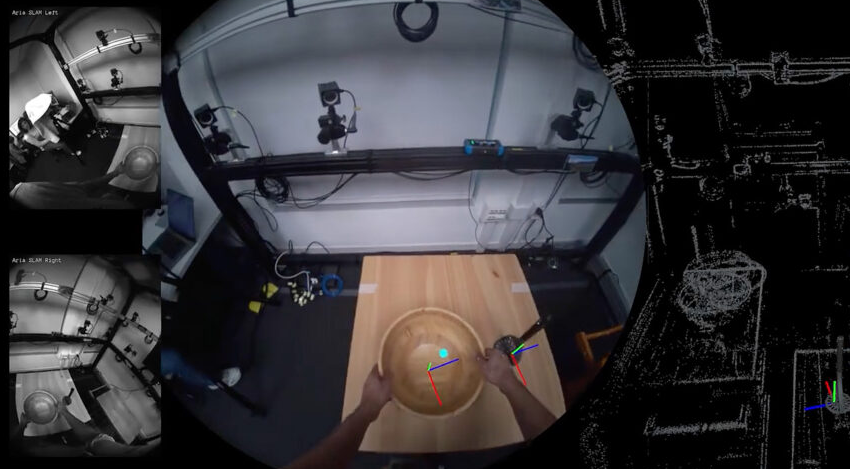

Meta Reality Labs researchers recently released a dataset dubbed HOT3D (“HOT” is an acronym for “Hand and Object Tracking”). This new egocentric dataset for 3D hand and object tracking has been designed to assist in developing vision-based systems that better understand complex hand-object interactions, thereby opening up the possibility of new applications.

According to the researchers, a system like this could help transfer skills between users by first capturing a sequence of hand-object interactions from an expert user, such as a tennis serve or furniture assembly. The captured information can subsequently be used to guide learners or less experienced users.

The system could also be used in robot training, helping transfer skills from humans to robots. This would allow autonomous robots to learn new skills with relative ease.

The researchers say the system could also help AI assistants better understand the context in which a user’s action occurs, or give AR/VR users new input capabilities, such as transforming any physical surface into a virtual keyboard or a pencil into a multifunctional virtual magic wand.

The dataset is available on Meta’s HOT3D project page, and the accompanying research paper describes it in detail.

Dataset Recorded With and For the Quest Platform

According to the research paper, the HOT3D dataset covers 800 minutes of egocentric video recordings and includes interactions with 33 everyday objects. These include simple scenarios that involve picking up, looking at, or putting down objects. Also included in the dataset are typical actions that users undertake in office, living room, and kitchen environments.

Meta Reality Labs captured the video data using Meta’s Project Aria research glasses and the company’s new Quest 3 VR headset. The use of these devices for data capture suggests that Meta is likely to use this dataset to train the AI systems for its existing and upcoming XR devices.

By Sam Ochanji, Editor, Virtual Reality Times

https://virtualrealitytimes.com/2024/07/03/hot3d-dataset-new-meta-reality-labs-ai-research-could-enhance-the-capabilities-of-quest-headsets/