Meta Demonstrates Impressive Full Body Tracking with Just the Quest Headset

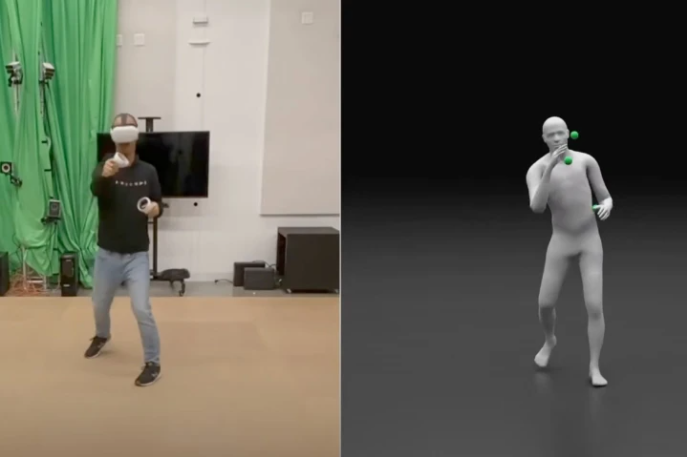

Most virtual reality systems currently track only the user’s head and hands. This could soon change thanks to Meta’s work on avatar rendering, which uses machine learning and sensor data from the Quest headset to display the user’s full body, including the arms, torso, legs, and head. The result is a very realistic and accurate rendering of a person’s movements and poses while they wear the Quest 2 headset.

What’s marvelous about this is that realistic full-body tracking is achieved based purely on the sensor data from the headset and controllers.

Leg motion and position are estimated using only the positions and orientations of the VR headset and its two controllers. The system requires no tracking bands on the legs and no external cameras. It was shared by Meta Reality Labs research scientist Alexander Winkler, who posted several videos on Twitter along with a link to the scientific paper on arXiv. He also shared a link to a more detailed YouTube video of the demonstration.
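To make “only the positions and orientations” concrete, here is a minimal sketch of what one frame of input could look like: three tracked devices, each with a 3D position and an orientation quaternion, flattened into 21 numbers. The class and function names are hypothetical, and the quaternion convention is an assumption; the actual QuestSim feature set may differ (for example, by including velocities or several past frames).

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z); this convention is an assumption


@dataclass
class DevicePose:
    """Pose of one tracked device: position in metres plus a unit orientation quaternion."""
    position: Vec3
    orientation: Quat


@dataclass
class SparseFrame:
    """Everything the model sees for one frame: the headset and the two controllers."""
    headset: DevicePose
    left_controller: DevicePose
    right_controller: DevicePose


def to_observation(frame: SparseFrame) -> List[float]:
    """Flatten the three device poses into one feature vector: 3 devices x (3 + 4) = 21 values."""
    obs: List[float] = []
    for device in (frame.headset, frame.left_controller, frame.right_controller):
        obs.extend(device.position)
        obs.extend(device.orientation)
    return obs


# Example frame: user standing upright, controllers held at waist height.
identity: Quat = (1.0, 0.0, 0.0, 0.0)
frame = SparseFrame(
    headset=DevicePose((0.0, 1.7, 0.0), identity),
    left_controller=DevicePose((-0.25, 1.0, 0.1), identity),
    right_controller=DevicePose((0.25, 1.0, 0.1), identity),
)
observation = to_observation(frame)  # 21 numbers; nothing about the legs is observed directly
```

Nothing in this vector describes the legs, which is why their pose has to be inferred rather than measured.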

> This paper shows some of our research at Meta Reality Labs in reconstructing a user's pose from only the sensors of the Quest headset using Reinforcement Learning.
>
> authors: with Jungdam Won and Yuting Ye
>
> paper: https://t.co/hpZhuY8PC1
>
> video: https://t.co/BBRGMoHt4t
>
> 1/4👇 pic.twitter.com/EiwQ54R5th
>
> (Alexander Winkler, @awinkler_, September 22, 2022)

Using AI for tracking is not new. Meta has previously shown that artificial intelligence can be a foundational technology for virtual and augmented reality through hand tracking on Quest. That work involved training a neural network on many hours of hand movements to produce fairly accurate and robust hand tracking, even with the Quest’s low-resolution cameras, which aren’t optimized for hand tracking.

This is possible because of the predictive nature of AI: the model draws on prior knowledge learned during training on a large number of examples. As a result, a few real-world inputs are enough to translate a user’s hands accurately into the virtual world. Fully capturing and rendering the hands in real time without that learned prior would require far more computing power.

Meta’s latest tracking project applies the lessons learned from AI-powered hand tracking to the entire body. Using AI trained on previously collected data, it produces the most plausible, physically accurate simulation of the virtual body’s movements consistent with the user’s real movements. The system, called QuestSim, can realistically animate a full-body avatar using only the sensor data from the headset and the two controllers.

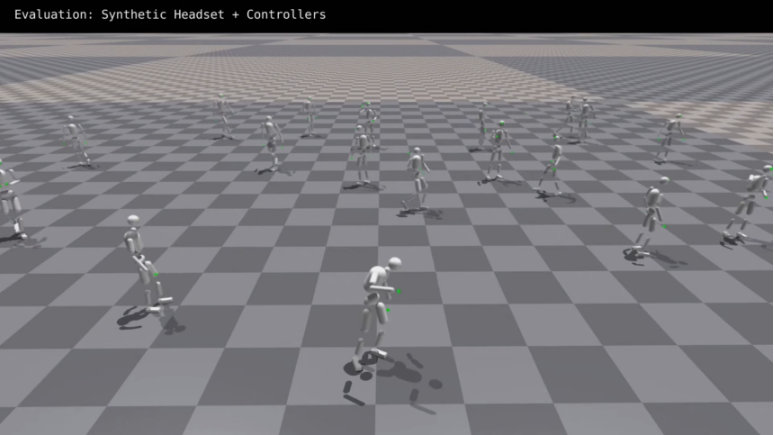

QuestSim was trained on synthetic sensor data. To generate it, the researchers simulated headset and controller movements based on eight hours of motion-capture clips of 172 people. This meant the team could train QuestSim without first recording headset and controller data alongside body movements from scratch.
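One way to picture “simulating headset and controller movements” from motion capture: treat the mocap head joint as the headset and each wrist joint as a controller, shifted by a fixed mounting offset expressed in the joint’s own frame. This is an illustrative sketch, not the paper’s procedure; the joint names, offsets, and optional Gaussian jitter are all hypothetical assumptions.

```python
import random
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z) unit quaternion
Pose = Tuple[Vec3, Quat]

# Hypothetical mounting table: virtual device -> (mocap joint it rides on,
# offset from that joint in the joint's local frame, in metres).
DEVICE_MOUNTS: Dict[str, Tuple[str, Vec3]] = {
    "headset": ("head", (0.0, 0.05, 0.10)),
    "left_controller": ("left_wrist", (0.0, 0.0, 0.08)),
    "right_controller": ("right_wrist", (0.0, 0.0, 0.08)),
}


def quat_rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by unit quaternion q via v' = v + w*t + u x t, where t = 2 (u x v)."""
    w, ux, uy, uz = q
    tx = 2.0 * (uy * v[2] - uz * v[1])
    ty = 2.0 * (uz * v[0] - ux * v[2])
    tz = 2.0 * (ux * v[1] - uy * v[0])
    return (
        v[0] + w * tx + (uy * tz - uz * ty),
        v[1] + w * ty + (uz * tx - ux * tz),
        v[2] + w * tz + (ux * ty - uy * tx),
    )


def synthesize_devices(
    joints: Dict[str, Pose], noise_std: float = 0.0, rng: Optional[random.Random] = None
) -> Dict[str, Pose]:
    """Derive virtual headset and controller poses from one mocap frame.

    Each device copies its joint's orientation; its position is the joint position
    plus the mount offset rotated into world space, plus optional Gaussian jitter.
    """
    rng = rng if rng is not None else random.Random(0)
    devices: Dict[str, Pose] = {}
    for device, (joint, offset) in DEVICE_MOUNTS.items():
        position, rotation = joints[joint]
        world_offset = quat_rotate(rotation, offset)
        noisy = tuple(
            p + o + (rng.gauss(0.0, noise_std) if noise_std > 0 else 0.0)
            for p, o in zip(position, world_offset)
        )
        devices[device] = (noisy, rotation)
    return devices


# One made-up mocap frame (identity rotations) turned into synthetic sensor readings.
identity: Quat = (1.0, 0.0, 0.0, 0.0)
mocap_frame: Dict[str, Pose] = {
    "head": ((0.0, 1.6, 0.0), identity),
    "left_wrist": ((-0.3, 1.0, 0.0), identity),
    "right_wrist": ((0.3, 1.0, 0.0), identity),
}
devices = synthesize_devices(mocap_frame)
```

Run over every frame of a mocap clip, a function like this yields paired data: synthetic sensor readings as input and the full-body pose as the target, with no headset ever worn during capture.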

The motion-capture clips included 130 minutes of walking, 110 minutes of jogging, 80 minutes of casual conversation with gestures, 70 minutes of balancing, and 90 minutes of whiteboard discussion. Training the simulated avatars with reinforcement learning took about two days.
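As a quick check, these category durations add up to the eight hours of motion-capture footage:

$$
130 + 110 + 80 + 70 + 90 = 480\ \text{min} = \frac{480}{60}\ \text{h} = 8\ \text{h}
$$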

This problem is known as motion tracking from sparse sensors. Machine learning is effective at extracting meaningful information from very sparse data when there are strong enough dependencies between the known and the unknown quantities.

Because people swing their arms when they walk or run, arm movements are a fairly good indicator of what a person’s legs are doing. Combined with the tilt and direction of the head, this information lets a machine learning system predict most human motion accurately.
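The arm-to-leg dependency can be illustrated with a toy model. This is not QuestSim’s method (which uses reinforcement learning and physics simulation); it is a hypothetical sketch assuming a synthetic gait where the left arm swings in phase with the right leg, with made-up amplitudes and noise. A one-parameter least-squares fit on the observed arm signal then recovers the hidden leg signal far better than a “no information” baseline.

```python
import math
import random
from typing import List, Tuple


def synthetic_walk(n: int, noise: float, seed: int = 0) -> Tuple[List[float], List[float]]:
    """Toy gait: left-arm swing and right-leg swing share one phase (contralateral coordination).

    Amplitudes and stride length are made up; noise stands in for everything unmodelled.
    """
    rng = random.Random(seed)
    arm, leg = [], []
    for i in range(n):
        phase = 2.0 * math.pi * i / 50.0  # one stride every 50 frames (assumed)
        arm.append(0.3 * math.sin(phase) + rng.gauss(0.0, noise))  # observed: controller fore/aft offset
        leg.append(0.5 * math.sin(phase))  # hidden: right-foot fore/aft offset
    return arm, leg


def fit_gain(x: List[float], y: List[float]) -> float:
    """Least-squares slope through the origin: argmin_w sum((y - w*x)^2) = sum(x*y) / sum(x^2)."""
    return sum(a * b for a, b in zip(x, y)) / sum(a * a for a in x)


def rmse(pred: List[float], target: List[float]) -> float:
    """Root-mean-square error between two equal-length sequences."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target))


train_arm, train_leg = synthetic_walk(1000, noise=0.02, seed=1)
w = fit_gain(train_arm, train_leg)

test_arm, test_leg = synthetic_walk(500, noise=0.02, seed=2)
pred = [w * a for a in test_arm]
baseline = [0.0] * len(test_leg)  # ignore the arms and always predict a neutral leg
print(f"gain={w:.2f}  rmse={rmse(pred, test_leg):.3f}  baseline rmse={rmse(baseline, test_leg):.3f}")
```

The fit works only because the toy data builds in a strong dependency. If the user does something the dependency does not cover, such as stepping without swinging their arms, the prediction breaks down, which mirrors the unusual-stance failures described below.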

A more conventional approach to limb tracking requires extra hardware: either reflective markers placed on the wearer’s legs and torso and tracked by external cameras, or bands with wireless beacons worn at various points on the legs that transmit motion and position data.

The conventional approach works, but the required hardware is usually sold as an accessory: it costs extra and is not supported by most games and apps. If Meta can achieve good full-body tracking results using only the Quest headset, developers will likely be able to integrate body tracking into their games and apps as well.

Although this is a major advance, Meta Reality Labs is cautious and acknowledges that the system still needs work. If a user moves too fast, the machine learning model fails to identify their pose accurately. The model also struggles to estimate unusual stances. Movements can also fail to match when the virtual environment contains obstacles that do not exist in reality.

The overall effect is still reasonably good. Even so, it would be great to see further improvements that show full bodies, rather than merely floating torsos, when interacting with friends in virtual reality.

It is not clear whether this technology will launch soon. Meta’s Quest Pro headset is set to be announced in three weeks, so it is perhaps no coincidence that Meta is unveiling full-body avatars now: the Quest Pro is expected to have the computing power and specifications to render these avatars much better than the current Quest 2 headset.

The Meta Quest Pro has both eye-tracking and face-tracking functionalities and can offer users an enhanced sense of presence with those they are interacting with in virtual reality.

By Rob Grant, Virtual Reality Times: https://virtualrealitytimes.com/2022/09/23/meta-demonstrates-impressive-full-body-tracking-with-just-the-quest-headset/