Oculus Rift CV2 Specs

Earlier I published an article predicting the BUST that comes after the BOOM in virtual reality, because of unrealistic expectations created by tech-pushing, clickbait journalism.

One of the ways out of the problem is for Oculus to release a CV2 that’s more in line with what we all actually want. We all know the Rift is still a child’s toy compared to what’s coming in a decade, and we fans are definitely impatient. So, here I will make a list of what I think is needed, and why, to make VR truly breathtaking and ready for a killer app that captivates the masses and gets them to purchase a device for their homes. Without this, there will not be enough money in the system to sustain or justify all the billions of dollars going into investments in virtual reality.

Unfortunately, Oculus has recently made it clear that they aren’t going to be releasing a new headset anytime soon, so we’ll be waiting another 2-3 years or so, putting us into 2019 at the earliest. To me it’s a strange plan because we’ll certainly see a lot of better headsets being released between now and then, capturing market share, and most importantly, the interest of VR fans. However, the upside is that CV2 will be an order of magnitude better when it comes to immersion, at least on the visual aspect. The haptic part is still decades away.

So below we discuss some of what we see coming for CV2:

Better Resolution: 4K Per Eye

The most obvious improvement that everyone wants is better resolution, in order to improve realism and remove the Screen Door Effect (SDE). Palmer Luckey has said that things get really good at 8K resolution, but that could realistically be 10 years off. Meanwhile, 4K would be a vast improvement over what we have now.

Current Resolution of Rift and Vive: 2,592,000 pixels (2160 x 1200 across both eyes, or 1080 x 1200 per eye)

4K Per Eye Would be: 16,588,800 pixels in total (3840 x 2160 per eye, two eyes)

So, 4K per eye would mean 6.4 times as many pixels as we have now.
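The arithmetic above can be checked with a few lines of Python. This is only a sketch: the function name `pixel_count` is made up for illustration, and the pixels-per-second figure simply multiplies the pixel count by the 90 fps target discussed in this article.

```python
def pixel_count(width, height, eyes=1):
    """Total number of pixels for a display of the given size."""
    return width * height * eyes

# Rift and Vive: 2160 x 1200 is the combined resolution for both eyes.
current = pixel_count(2160, 1200)

# 4K per eye: 3840 x 2160 for each of the two eyes.
four_k = pixel_count(3840, 2160, eyes=2)

# How many times more pixels the GPU would have to fill.
ratio = four_k / current

# Pixels that must be drawn every second at a 90 fps refresh rate.
REFRESH_HZ = 90
current_per_second = current * REFRESH_HZ
four_k_per_second = four_k * REFRESH_HZ

print(f"current: {current:,} pixels, 4K per eye: {four_k:,} pixels")
print(f"ratio: {ratio:.1f}x")
print(f"pixels per second at {REFRESH_HZ} fps: {four_k_per_second:,}")
```

At 90 fps that works out to about 1.5 billion pixels per second for 4K per eye, compared with about 233 million today, which is why the graphics card matters as much as the screen.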

Since you can already buy a 4K cell phone, it’s natural to wonder why they don’t just use two of these screens and “voila”, 4K per eye? The answer is that these 4K screens aren’t optimized for VR. They can’t run at the 90fps necessary for good VR, and they don’t have enough contrast. A discussion about this is here on Reddit.

The other limiting factor is the ability to process all that data and get it onto those screens. The best and newest graphics cards are designed to run the Oculus and Vive well with the current resolution. In order to process more data and more pixels, much better graphics cards could be necessary, and that could take several years.

However, we may not have to wait for 2-3 more generations of graphics cards, because one factor that may improve this is VAR (Variable Acuity Resolution), also known as foveated rendering, described in the next section.

VAR: Variable Acuity Resolution or Foveated Rendering

As just mentioned above, VAR is absolutely essential to bring into the next Rift. This is a software technology that can vastly speed up the processing of 3D data, so that a much larger resolution can be rendered. We have a detailed explanation of it here: VAR / Foveated Rendering

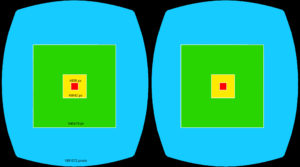

It requires a camera inside the headset that tracks the precise location of the user’s eyes, so that much higher detail is rendered only right where he or she is actually looking. I’m not sure how much it can reduce the number of calculations required to render a 3D scene, but it should at least be good enough to get us to 4K Per Eye with today’s graphics cards that run the Rift, such as the GeForce GTX 980 Ti.
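To get a rough feel for the possible savings, here is a back-of-the-envelope sketch in Python. Everything in it is an assumption made for illustration, not a measurement: the function name `foveated_pixels`, the idea that 10% of the image is rendered at full detail, and the idea that the rest is rendered at one quarter of the resolution along each axis. Real foveated renderers use several blended regions and have extra overhead that this ignores.

```python
def foveated_pixels(width, height, fovea_fraction, periphery_scale, eyes=2):
    """Estimate how many pixels are actually shaded with foveated rendering.

    fovea_fraction:  share of the image area rendered at full detail (assumed).
    periphery_scale: resolution scale per axis for everything else (assumed),
                     so the pixel count there shrinks by periphery_scale ** 2.
    """
    full_res = width * height * eyes
    fovea = full_res * fovea_fraction
    periphery = full_res * (1.0 - fovea_fraction) * periphery_scale ** 2
    return fovea + periphery

# 4K per eye with purely illustrative foveation settings.
full = 3840 * 2160 * 2
shaded = foveated_pixels(3840, 2160, fovea_fraction=0.10, periphery_scale=0.25)
savings = full / shaded

print(f"full render: {full:,} pixels")
print(f"foveated:    {shaded:,.0f} pixels")
print(f"reduction:   {savings:.1f}x")
```

With these made-up settings, the shaded pixel count for 4K per eye drops to roughly 2.6 million, about what the current 2160 x 1200 headsets draw every frame. Different assumptions give very different answers, so treat this as a way to play with the numbers rather than a prediction.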

The Fove headset is already working on this, and we should expect Oculus and Vive to do it too. They certainly know about the idea, since it was reported in 2012 as part of early Oculus Rift specs (http://www.roadtovr.com/early-oculus-rift-specifications-and-official-site-confirms-500-target/)

Tracking the user’s eyes has other benefits as well, such as targeting in games, or a GUI that allows a person to make selections or drop down a menu simply by looking at it for a specific length of time.
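The "look at it for a specific length of time" idea is usually called dwell selection, and the logic is simple enough to sketch in Python. The class name `DwellSelector`, its `update` method, and the one-second default are all hypothetical names and values chosen for this example. It assumes some eye tracker (not shown) reports which on-screen target the user is looking at, with a timestamp in seconds.

```python
class DwellSelector:
    """Fires a selection when the gaze stays on one target long enough."""

    def __init__(self, dwell_seconds=1.0):
        self.dwell_seconds = dwell_seconds
        self._target = None   # target currently under the gaze
        self._since = None    # time the gaze first landed on it
        self._fired = False   # prevents re-firing while the gaze stays put

    def update(self, target, now):
        """Feed one gaze sample; return the target if it was just selected."""
        if target != self._target:
            # Gaze moved to a new target (or to nothing): restart the timer.
            self._target = target
            self._since = now
            self._fired = False
            return None
        if target is None or self._fired:
            return None
        if now - self._since >= self.dwell_seconds:
            self._fired = True
            return target
        return None


selector = DwellSelector(dwell_seconds=1.0)
samples = [("menu", 0.0), ("menu", 0.5), ("menu", 1.0), ("menu", 1.5),
           (None, 1.6), ("ok_button", 2.0), ("ok_button", 3.1)]
selected = [selector.update(target, t) for target, t in samples]
print(selected)
```

The `_fired` flag is the design choice worth noting: without it, a menu would keep re-opening for as long as the user kept staring at it.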

Holographic or Lightfield Displays

The Oculus screen is currently a flat one that fools your eyes into thinking you’re looking at 3D. In reality, everything is in focus at once, and your eyes and brain know the difference, at least subconsciously. That is enough to lower immersion, and for some people it causes headaches and eye strain.

Two types of new screen technology under development solve this problem by producing light that behaves more like the photons bouncing off real objects. One of those is lightfield displays, such as those being hawked by Magic Leap, and the other is “holographic” displays, such as described here on Tech Radar: http://www.techradar.com/news/next-gen-oculus-rift-headsets-could-focus-on-holographic-displays

One of the reasons that Oculus is probably going to wait so long is that they want one of these screens in CV2, and they are probably 2-3 years away.

Other Optical Improvements – Get Rid of the Fresnel Lens Glare

Palmer Luckey himself said it best in 2013 when talking about Fresnel lenses: “they kill contrast, add a variety of annoying artifacts, and don’t actually save all that much weight” (from this Reddit post). Yet it turned out that they had enough advantages that they were put into the CV1.

Luckey is a purist when it comes to trying to improve immersion, and I’m sure he hates the “god rays” and other issues that are part of the CV1 due to the Fresnel lens compromise that was made. I’m willing to bet he and the Oculus team are trying their best to solve this for their next headset. Hopefully they do, because the glare is really annoying, especially in high-contrast images such as a white logo on a black screen.

Hand Tracking and Gesture Recognition: Nimble VR & Pebbles Interfaces

We want to see our hands in VR! Oculus purchased Nimble, which already had this amazing technology two years ago, as shown here: Nimble Video. Since they seemed to already be so close, we should expect this technology in the next headset. A camera (or two) to watch your hands can also be used for other purposes such as detection of facial expressions.

Gesture recognition would be useful in many ways. For example, in games, other characters could recognize various hand signals, such as a “thumbs up”, or in social VR, your avatar should match your gestures, since so many people use their hands when conversing. Gestures can also be used as part of a GUI (Graphical User Interface), such as grabbing and moving things, opening menus, pushing buttons, etc.

The Oculus environment needs a standard for gesture recognition as part of the operating system, so that developers can easily use it for various controls and features in their software.

Mixed Reality and Intel’s RealSense

RealSense is a must-have new technology for VR (and even more so for AR) that should be included in the CV2. The technology will automatically map out the space you’re in, so you can avoid bumping into objects within the limited space that most people are using for VR. Microsoft and its partners are releasing headsets with this technology in 2017 and Oculus must keep up.

It can also be mixed with various games so that you can see and feel the objects in your room. Developers will be able to find endless uses for this mixed reality technology, greatly adding to immersion in VR.

This Wired article explains more about it: wired.co.uk/article/intel-realsense-virtual-reality-augmented-reality

Wireless

Ignoring the possibility of health issues related to high-throughput electromagnetic wave generators strapped around your brain, it’s obvious that the public is going to want next-gen headsets without the fat cable that currently runs from the Vive or Rift to the computer, which people trip on or use to sweep coffee cups and other stuff off their desks. It’s been reported that the Vive is working on this with startup Quark, and we should expect that Oculus is doing it too.

Better Developer Tools

Probably the best tool for developing VR apps with the most life-like results is UE4 (Unreal Engine 4). Yet it’s nearly impossible to use for anyone but 3D gaming geniuses. You basically need a four-year degree in nothing but UE4 to be able to make anything good with it. Animation usually must be done with another program. Importing characters is a nightmare. The resulting file sizes are ridiculously huge, carrying with them a huge percentage of unnecessary and unused code and junk.

Most UE4 games that look pretty good on a 2D screen look like crap in a VR environment, because so many of the standard tricks for creating a false impression of detail or shadow no longer work in VR because we humans are so attuned to our reality. For every UE4 savant that can currently make a great product in VR, there are 10,000 other artists, creators, and dreamers that have amazing ideas but are unable to use the current tools to make something like what they can imagine.

Easily creating awesome 3D scenes and characters, and bringing them to life, should no longer be a secret language only for super nerds. It needs to be made available to the rest of us. Therefore, Oculus should be focused on making new tools that make it far easier for ordinary people to create stuff in VR without a lifetime of coding experience, a team of geeks, and millions of dollars.

This concept isn’t part of the headset itself, but a necessary component of the VR environment that will make the Oculus appeal to a much larger audience.

VR LAN Parties

VR will really not be cool until you can take your headset and laptop over to a friend’s house, plug in to his or her network, and see each other within another world. While this is probably already possible with Minecraft, it can be difficult to set up, and it’s glitchy.

Oculus and Vive need to create a standardized tool to make it easy for friends to connect like this, regardless of what headset they own, so they can create art, build a virtual house, or fight dragons and zombies together. And to make it interesting, they sure need to be able to see each other’s facial expressions.

Real Time Expression Recognition

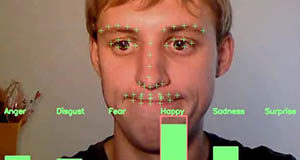

The headset needs, in addition to the IR camera it already has, another parallel one to watch the user’s facial expression. Because the user’s eyes are covered up, two more smaller cameras inside the headset can watch the eyes, since they are also crucial to detecting emotions such as happiness. Cameras inside the headset are already going to be needed for eye tracking (VAR), as noted above. Expression and eye tracking can also be used as a form of lie detection, which could be useful for security. This article has some interesting information about reading seven universal “microexpressions”: scienceofpeople.com/2013/09/guide-reading-microexpressions/.

Why does VR need expression recognition? The answer is that the “multiverse”, considered to be one of VR’s most important goals and killer apps, is incredibly bland without the avatars being able to have real time expressions that mimic their meatspace counterparts. If you’ve ever tried Second Life and experienced what a “conversation” is like, it’s pretty ridiculous because you don’t bother to look at each other, since there’s no emotional interaction between people.

This technology is readily available, as shown by this article that lists 20 different companies selling an emotion recognition product. The real challenge is to make it work while someone has half their face covered, hence the need for multiple cameras.

My guess is that we won’t get this feature until CV3, but it’s certainly something that’s absolutely essential to creating “presence” so that VR will go mainstream.

Price Prediction and Summary

Many people are hoping and expecting the price of VR headsets to go down, but with all the new technology needed to keep improving virtual reality, that’s very unlikely to happen. New cameras, bigger and faster screens, lots of new software, and millions in R&D will probably keep prices about where they are.

The geniuses behind Oculus, such as Luckey, Carmack, and Zuckerberg, are already billionaires and so they’re not in this to make more money. They have a long-term vision for virtual reality that requires a tremendous number of new technologies to be imagined and created, which will take at least a decade just to get the visual part of VR close to done. The TOUCH aspect of VR, of course, is still many decades away, and will require technologies that are at this point the realm of pure science fiction.

Geoff McCabe, Virtual Reality Times: https://virtualrealitytimes.com/2017/02/16/oculus-rift-cv2-specs/