PHRTF Sound: Far better than 3D Sound for VR

During his annual presentation at Oculus Connect, Michael Abrash, Chief Scientist at Oculus, talked about the ultimate audio solution for VR: PHRTFs (Personalized Head-Related Transfer Functions), which he claims will outperform current 3D audio technology. VR audio, or 3D audio, is often referred to as spatialization: the ability to play a sound as if it were positioned at a particular place in 3D space. VR audio is possible thanks to HRTFs, which can be measured with existing technology, but every person has a different HRTF.

VR Audio and Personalized HRTFs

To better understand this term, let us first look at how Griffin D. Romigh, Ph.D. in Electrical and Computer Engineering, defines HRTF:

Head-related transfer functions represent and describe the acoustic transformations caused by interactions of sound with a listener’s head, shoulders, and outer ears which give a sound its directional characteristics. Once this transformation is measured, it can be combined with any non-directional sound to give a listener the perceptual illusion that the sound originated from a designated location in space.
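In signal-processing terms, "combining" a measured HRTF with a non-directional sound means filtering it once per ear. The notation below is one standard way to write this and is ours, not Romigh's: x(t) is the non-directional source signal, h_L and h_R are the head-related impulse responses (the time-domain form of the HRTF) for a source at azimuth θ and elevation φ, and * denotes convolution.

```latex
\begin{aligned}
y_L(t) &= \big(h_L(\cdot\,;\theta,\varphi) * x\big)(t), &
y_R(t) &= \big(h_R(\cdot\,;\theta,\varphi) * x\big)(t), \\
Y_L(f) &= H_L(f;\theta,\varphi)\,X(f), &
Y_R(f) &= H_R(f;\theta,\varphi)\,X(f).
\end{aligned}
```

The second line is the same operation in the frequency domain, since convolution in time becomes multiplication in frequency. This is also why an HRTF can be estimated by dividing the spectrum recorded at the ear by the spectrum of the sound that was played.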

In practice, this means developers need equipment that measures our heads and ears so they can accurately replicate the sounds we hear, letting us tell where sounds come from in a virtual reality environment just as we do in real life. So why do we need to make this personal? Simply because we all have different heads and bodies. It is possible, although expensive, to measure HRTFs from a large group of people and build a reference set from them. Recording everyone's HRTFs, however, would be unfeasible with current technology: HRTFs are measured in special rooms, and each measurement takes 1 to 2 hours per person.

So Abrash is right when he says we won’t see PHRTF in the next 5 years, but we will definitely see improvements in HRTF related technology.

“Accurate audio is arguably even more complex than visual improvement due to the discernible effect of the speed of sound; despite these impressive advancements, real-time virtual sound propagation will likely remain simplified well beyond five years, as it is so computationally expensive.”

The main challenge in making PHRTFs possible is overcoming the difficulty and high cost of recording each person's HRTF. This technology is essential for improving virtual reality audio: it provides a strong sense of immersion, because sounds give us important clues about where we are in a real three-dimensional environment.

Just like sound localization in real life, VR audio (or spatialization) depends on two main components, direction and distance, which on their own explain why realistic VR audio is not easy to develop:

Directional Spatialization with HRTFs

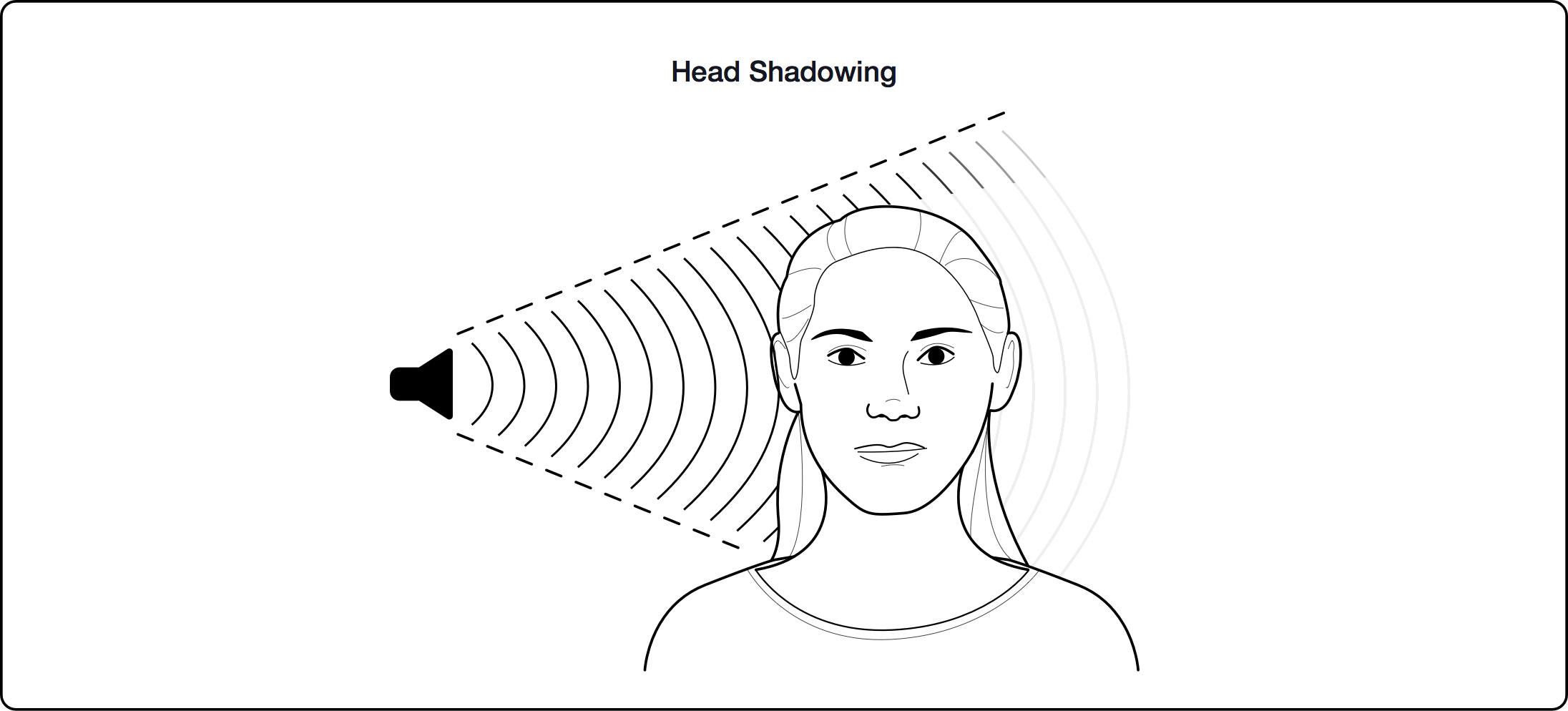

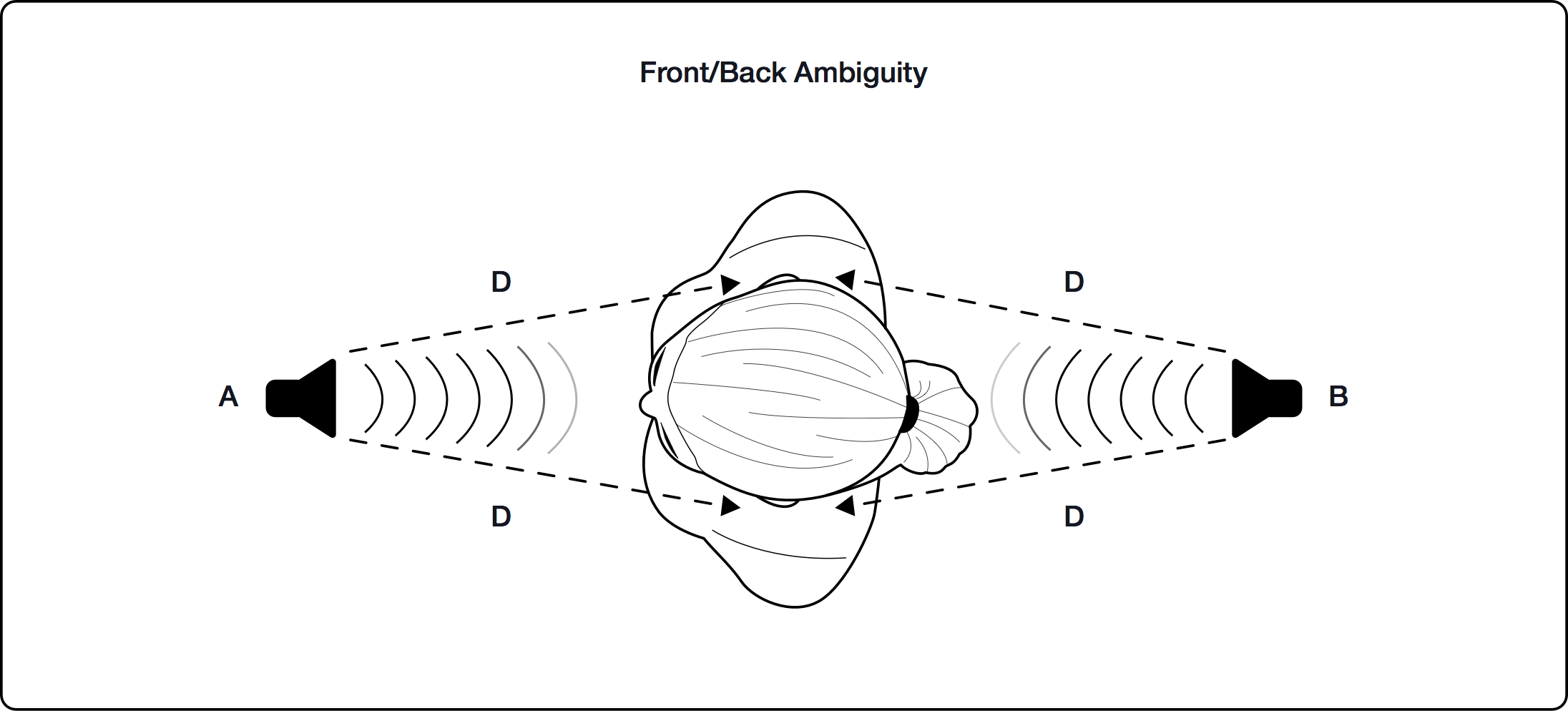

Our ear geometry and body transform sounds differently depending on the direction they come from. Head-Related Transfer Functions (HRTFs) capture these direction-dependent effects, and they are used to localize sounds.

How Are HRTFs Captured?

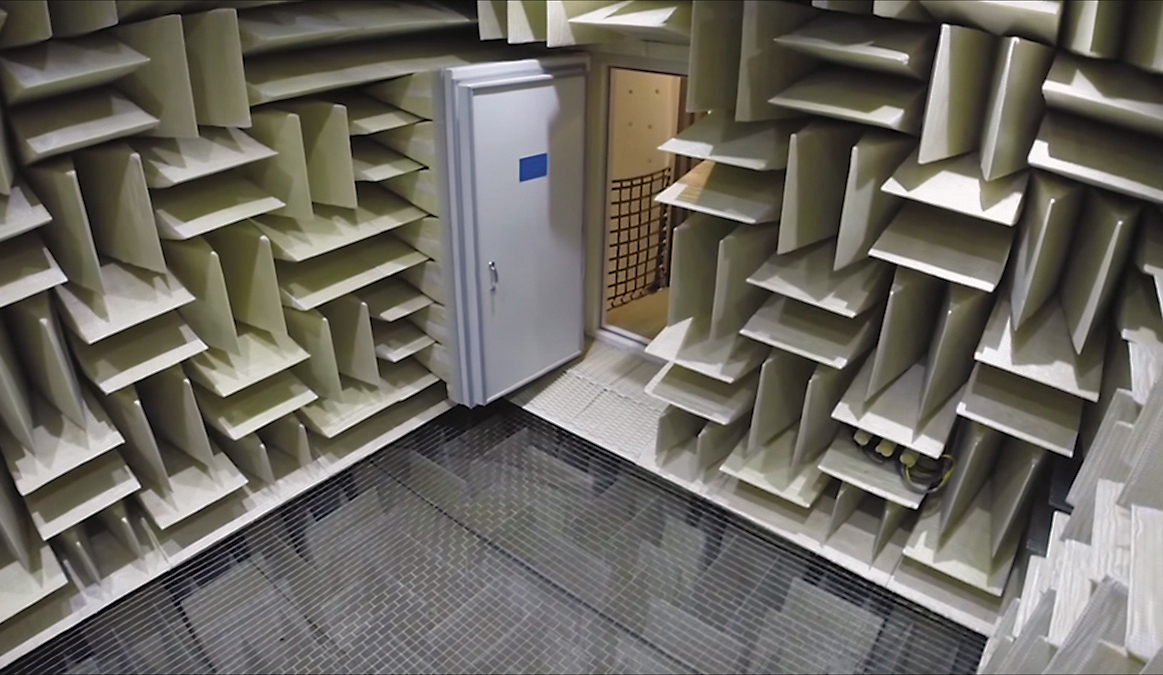

HRTFs are captured by placing a person in an anechoic chamber, putting microphones in their ears, and playing sounds from every possible direction while the microphones record them. The captured sounds are then compared with the original sounds to compute the HRTF that would transform one into the other. This is done for both ears, and usable sample sets require sounds to be captured from a considerable number of discrete directions.
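The comparison step can be sketched as a regularized division in the frequency domain: the recorded spectrum divided by the played spectrum gives the transfer function for one ear and one direction. This is a minimal illustration under stated assumptions, not a lab procedure: a broadband noise test signal, a made-up 3-tap filter standing in for a real ear, a hypothetical regularization constant `eps`, and no correction for loudspeaker or microphone coloration.

```python
import numpy as np

def estimate_hrtf(played: np.ndarray, recorded: np.ndarray, n_fft: int, eps: float = 1e-8) -> np.ndarray:
    """Estimate H(f) = Y(f) / X(f) for one ear and one direction.

    played:   test signal sent to the loudspeaker (x)
    recorded: signal captured by the in-ear microphone (y)
    eps:      assumed regularization term that keeps the division stable
              at frequencies where the test signal carries little energy
    """
    X = np.fft.rfft(played, n_fft)
    Y = np.fft.rfft(recorded, n_fft)
    # Regularized spectral division: Y * conj(X) / (|X|^2 + eps)
    return Y * np.conj(X) / (np.abs(X) ** 2 + eps)

def hrtf_to_hrir(H: np.ndarray, n_fft: int, length: int) -> np.ndarray:
    """Convert the frequency response back into a truncated impulse response."""
    return np.fft.irfft(H, n_fft)[:length]

# Synthetic check: pretend the "ear" is a known (hypothetical) 3-tap filter.
rng = np.random.default_rng(0)
true_hrir = np.array([0.0, 0.8, -0.3])
played = rng.standard_normal(1024)            # broadband noise test signal
recorded = np.convolve(played, true_hrir)     # what the in-ear microphone would capture
n_fft = len(played) + len(true_hrir) - 1      # long enough to avoid circular wrap-around
H = estimate_hrtf(played, recorded, n_fft)
est_hrir = hrtf_to_hrir(H, n_fft, len(true_hrir))
print(np.round(est_hrir, 3))
```

Choosing `n_fft` at least as long as the full linear convolution is what lets the division recover the filter exactly in this noise-free toy case; real recordings add noise, which is where the regularization matters.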

Of course, not every person has the same physical characteristics, and it would be impossible to record everyone’s HRTF, so labs like Microsoft Research and Oculus use a generic reference set that fits most situations, particularly when combined with head-tracking.

We can assume that Microsoft's new headset will use Intel's RealSense cameras to create a 3D map of each user's ears and, from it, their PHRTF. In this scenario, during setup, users would point the headset's cameras at their ears, one ear at a time, to scan them and save the PHRTF. The headset would then use this data to give VR apps, content, or videogames the exact shape and size of your head and ears so they can adjust 3D sounds appropriately.

Applying HRTFs

Once the HRTF set is ready, developers who know the direction a sound should come from can select the appropriate HRTF and apply it to the sound. This is done either by time-domain convolution or with an FFT/IFFT pair, which performs the same convolution in the frequency domain. In other words, audio signals are filtered so that they sound as if they come from a particular direction. Put in a few words this sounds easy, but it is actually costly and difficult to develop.
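The selection-then-filtering step can be sketched as follows: snap the requested direction to the nearest measured one, then convolve the mono signal with that direction's left and right impulse responses, either directly or through an FFT/IFFT pair. `HRIR_SET` and its 2-tap filters are hypothetical placeholders (a real generic set holds hundreds of measured directions, usually with elevation too), and the azimuth-only lookup is a simplifying assumption.

```python
import numpy as np

# Hypothetical generic HRIR set: azimuth in degrees -> (left HRIR, right HRIR).
# 90 degrees is taken to be the listener's right; all HRIRs share one length.
HRIR_SET = {
    0:   (np.array([1.0, 0.2]), np.array([1.0, 0.2])),
    90:  (np.array([0.3, 0.1]), np.array([1.0, 0.4])),
    180: (np.array([0.7, 0.3]), np.array([0.7, 0.3])),
    270: (np.array([1.0, 0.4]), np.array([0.3, 0.1])),
}

def nearest_azimuth(azimuth_deg: float) -> int:
    """Pick the measured direction closest to the requested one, wrapping at 360."""
    def angular_gap(a: float) -> float:
        d = abs(a - azimuth_deg) % 360
        return min(d, 360 - d)
    return min(HRIR_SET, key=angular_gap)

def spatialize_time_domain(mono: np.ndarray, azimuth_deg: float):
    """Direct time-domain convolution, one filter per ear."""
    left, right = HRIR_SET[nearest_azimuth(azimuth_deg)]
    return np.convolve(mono, left), np.convolve(mono, right)

def spatialize_fft(mono: np.ndarray, azimuth_deg: float):
    """Same result via an FFT/IFFT pair: multiply spectra, transform back."""
    left, right = HRIR_SET[nearest_azimuth(azimuth_deg)]
    n = len(mono) + len(left) - 1  # full linear-convolution length
    X = np.fft.rfft(mono, n)
    return (np.fft.irfft(X * np.fft.rfft(left, n), n),
            np.fft.irfft(X * np.fft.rfft(right, n), n))

mono = np.array([1.0, 0.0, 0.5, -0.5])
l_td, r_td = spatialize_time_domain(mono, 80)  # snaps to the 90-degree filters
l_fd, r_fd = spatialize_fft(mono, 80)
print(np.allclose(l_td, l_fd), np.allclose(r_td, r_fd))
```

For short filters like these, direct convolution is cheaper; the FFT route pays off when HRIRs are hundreds of taps long and audio is processed in blocks.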

Headphones are also essential, because an array of speakers would complicate things unnecessarily.

Head Tracking

People use head motion to identify and locate sounds in space; without it, our ability to locate sounds in three dimensions would be considerably reduced. When people turn their heads to one side, developers must make sure the audio reflects that movement; otherwise, sounds will seem false and not immersive enough.

High-end headsets can already track head orientation, and in some cases position as well. So if developers feed head orientation information to a sound package, it can project immersive sounds into the listener's space.
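Feeding head orientation to a spatializer essentially means re-expressing each source's direction relative to the listener's head before choosing an HRTF. A minimal 2D sketch, assuming yaw-only tracking and a hypothetical coordinate convention (not any particular SDK's): yaw 0 faces +y, angles grow clockwise, and 90 degrees is the listener's right.

```python
import math

def head_relative_azimuth(listener_xy, head_yaw_deg, source_xy):
    """Azimuth of a source relative to where the listener faces, in degrees [0, 360).

    Hypothetical convention: yaw 0 faces +y, angles grow clockwise,
    so 90 is the listener's right and 270 the left.
    """
    dx = source_xy[0] - listener_xy[0]
    dy = source_xy[1] - listener_xy[1]
    world_azimuth = math.degrees(math.atan2(dx, dy))  # clockwise from +y
    return (world_azimuth - head_yaw_deg) % 360

source = (1.0, 0.0)
print(head_relative_azimuth((0.0, 0.0), 0.0, source))   # source to the listener's right
print(head_relative_azimuth((0.0, 0.0), 90.0, source))  # listener has turned to face it
```

Re-running this every frame with the tracked yaw is what keeps a virtual source fixed in the room while the head turns.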

Distance Modeling

HRTFs convey a sound's direction, but not its distance. Humans use several cues to determine or estimate the distance of a sound source, and these can be simulated in software:

Loudness

This is perhaps the easiest one and our most reliable cue when we hear a sound. Developers can simply attenuate the sound based on the distance between the listener and the source.

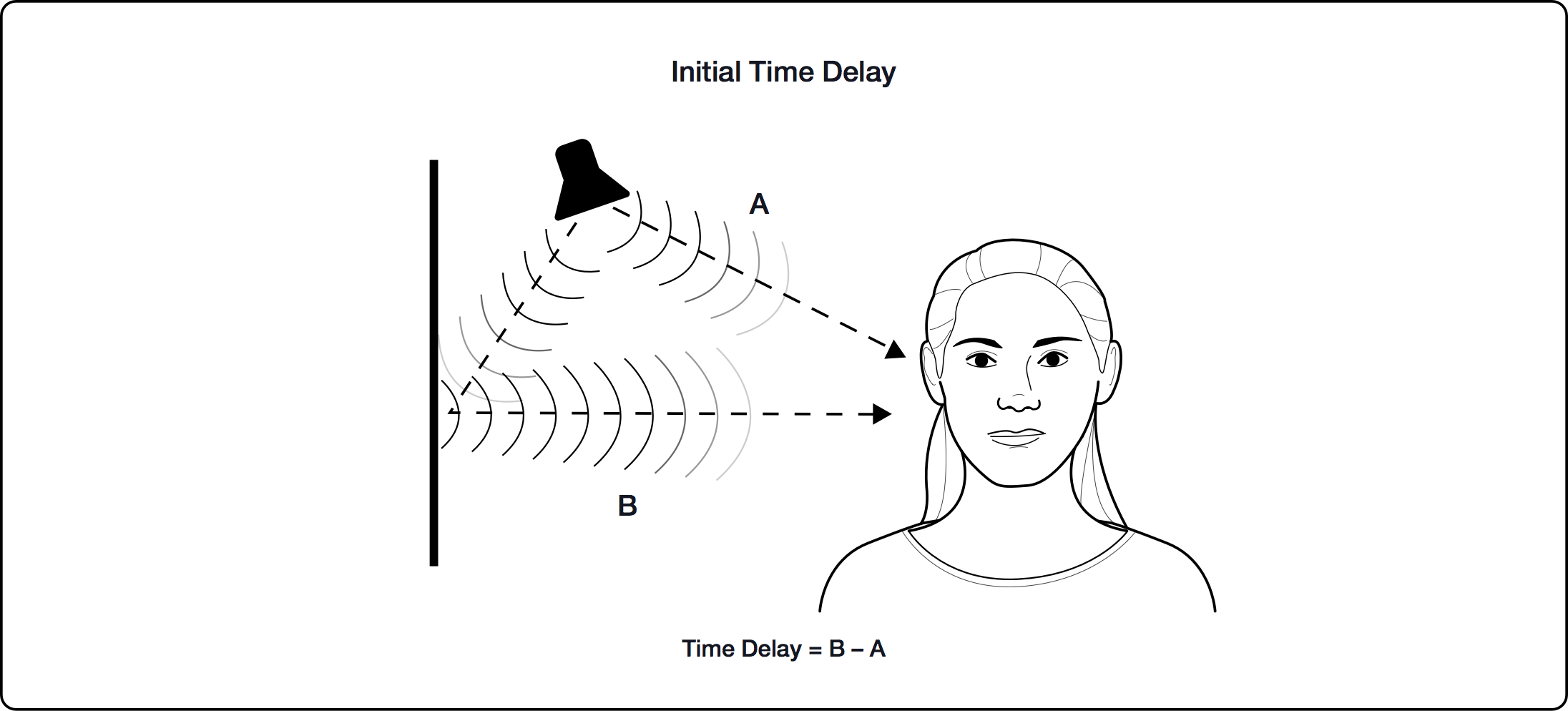
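Distance attenuation is commonly modeled with the inverse-distance law: sound intensity falls with the square of distance, so amplitude falls as 1/r. A minimal sketch; the 1 m reference distance and the clamp inside it are illustrative choices, not values from the source.

```python
import math

def distance_gain(distance_m: float, ref_distance_m: float = 1.0) -> float:
    """Amplitude gain for a point source under the inverse-distance (1/r) law.

    Inside ref_distance_m the gain is clamped to 1 so it does not blow up
    as the source approaches the listener (the 1 m default is arbitrary).
    """
    return ref_distance_m / max(distance_m, ref_distance_m)

def gain_to_db(gain: float) -> float:
    """Convert an amplitude gain to decibels."""
    return 20.0 * math.log10(gain)

for d in (1.0, 2.0, 4.0, 8.0):
    g = distance_gain(d)
    print(f"{d:4.1f} m -> gain {g:.3f} ({gain_to_db(g):+.1f} dB)")
```

Each doubling of distance lowers the level by about 6 dB. Audio engines often expose other rolloff curves as well, but this one follows the physics of a point source in free field.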

Initial Time Delay

This cue is much harder to replicate: it requires computing the early reflections for a given geometry and its acoustic characteristics. It is also computationally expensive and architecturally complicated.
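Full early-reflection modeling needs the room's geometry and materials, which is what makes it expensive, but the underlying cue can be illustrated with a single reflecting floor using the image-source method: the reflection travels as if it came from the source mirrored across the floor. The floor-only "room", the ear height, and the 343 m/s speed of sound are simplifying assumptions for this sketch.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 degrees C (assumed)

def initial_time_delay(source, listener, floor_z=0.0):
    """Gap in seconds between the direct sound and the first floor reflection.

    Image-source method for one reflecting plane: mirror the source across
    the floor and measure the extra path length of the reflected sound.
    """
    image = (source[0], source[1], 2 * floor_z - source[2])
    direct = math.dist(source, listener)
    reflected = math.dist(image, listener)
    return (reflected - direct) / SPEED_OF_SOUND

listener = (0.0, 0.0, 1.7)  # ear height in metres (assumed)
for d in (1.0, 5.0, 20.0):
    source = (d, 0.0, 1.7)
    print(f"{d:5.1f} m: {initial_time_delay(source, listener) * 1000:.2f} ms")
```

The gap between the direct sound and its first reflection shrinks as the source moves away, which is why this delay works as a distance cue; a real room adds walls and ceiling, each with its own image sources.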

Direct vs. Reverberant Sound

The ratio of direct to reverberant sound, also known as the wet/dry mix, emerges from any system that models late reverberation and reflections accurately. Such systems are usually very expensive.
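A rough way to see why this ratio encodes distance is the classic diffuse-field approximation: the direct (dry) sound falls off as 1/r, while the reverberant (wet) sound is roughly equally loud everywhere in the room. The two are equal at the so-called critical distance. The sketch below is that simplified model, not an accurate reverberation system; the 2 m critical distance and the amplitude-based crossfade in `wet_fraction` are illustrative assumptions.

```python
import math

def direct_to_reverberant_db(distance_m: float, critical_distance_m: float = 2.0) -> float:
    """Direct-to-reverberant ratio in dB under a diffuse-field model.

    Direct amplitude ~ 1/r, reverberant amplitude ~ constant, and the two are
    equal at the (assumed) critical distance, so DRR = 20*log10(r_c / r).
    """
    return 20.0 * math.log10(critical_distance_m / distance_m)

def wet_fraction(distance_m: float, critical_distance_m: float = 2.0) -> float:
    """Share of output amplitude sent to the reverb (0 = all dry, 1 = all wet)."""
    dry = critical_distance_m / distance_m  # relative direct amplitude
    wet = 1.0                               # relative reverberant amplitude
    return wet / (dry + wet)

for d in (1.0, 2.0, 8.0):
    print(f"{d:4.1f} m: DRR {direct_to_reverberant_db(d):+5.1f} dB, wet {wet_fraction(d):.2f}")
```

Far sources end up mostly "wet" and near sources mostly "dry", which is the perceptual cue; accurate systems replace the constant reverberant level with simulated late reflections for the actual room.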

Motion Parallax

This cue is a byproduct of the relative motion between a sound source and the listener: nearby sources appear to shift direction faster than distant ones.
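The geometry behind motion parallax is simple: for the same sideways movement, a near source sweeps through a larger angle than a far one. A minimal sketch, assuming a source straight ahead and a purely sideways step by the listener (a source moving sideways by the same amount produces the same angular shift); the 0.5 m step is an arbitrary example value.

```python
import math

def bearing_change_deg(distance_m: float, lateral_move_m: float) -> float:
    """Apparent angular shift (degrees) of a source straight ahead
    after the listener steps sideways by lateral_move_m."""
    return math.degrees(math.atan2(lateral_move_m, distance_m))

for d in (0.5, 2.0, 10.0):
    print(f"source at {d:4.1f} m: {bearing_change_deg(d, 0.5):5.1f} degrees")
```

Because a spatializer re-selects the HRTF from the updated head-relative direction, this cue comes for free once position tracking is fed into the audio pipeline.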

High Frequency Attenuation

High-frequency (HF) attenuation due to air absorption is a minor effect, but it is easy to model with a simple low-pass filter whose slope and cutoff frequency are adjusted with distance. It is not as important as the other distance cues, but we can't ignore it.
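The low-pass approach can be sketched with a first-order (6 dB per octave) filter whose cutoff slides down as the source moves away; a steeper slope would come from cascading several such stages. The 20 kHz to 2 kHz range, the 100 m span, and the geometric interpolation in `cutoff_for_distance` are hypothetical choices for illustration, not measured air-absorption data.

```python
import math

def one_pole_lowpass(samples, cutoff_hz, sample_rate=48_000):
    """First-order low-pass: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in samples:
        y += a * (x - y)
        out.append(y)
    return out

def cutoff_for_distance(distance_m, near_hz=20_000.0, far_hz=2_000.0, max_distance_m=100.0):
    """Hypothetical mapping: move the cutoff from near_hz down to far_hz with distance."""
    t = min(max(distance_m / max_distance_m, 0.0), 1.0)
    return near_hz * (far_hz / near_hz) ** t  # geometric interpolation

nyquist_tone = [1.0, -1.0] * 64  # highest frequency a 48 kHz stream can hold
for d in (1.0, 50.0, 100.0):
    y = one_pole_lowpass(nyquist_tone, cutoff_for_distance(d))
    peak = max(abs(v) for v in y[-8:])  # steady-state amplitude after filtering
    print(f"{d:5.1f} m: cutoff {cutoff_for_distance(d):7.0f} Hz, peak {peak:.3f}")
```

The high-frequency test tone survives almost untouched for a near source and is strongly damped for a far one, while low frequencies would pass largely unchanged in both cases.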

For more information, check the Oculus developer website.

By Pierre Pita, Virtual Reality Times: https://virtualrealitytimes.com/2017/03/13/phrtf-sound-far-better-than-3d-sound-for-vr/