Google’s AI Hand-Tracking Algorithm Could Be a Major Step to Sign Language Recognition

Sign language is used by millions of people around the world, but few people who are not deaf can understand the hand gestures that make it up. For quite some time, researchers have been trying to develop technologies that can recognize sign language and automatically translate its gestures into spoken or written language. Tech companies such as Kintrans and SignAll have built hand-tracking software that could potentially help millions more people communicate with signers, but so far these efforts have fallen short on translation accuracy.

Google reports that it has achieved something of a breakthrough with a new hand-tracking algorithm capable of following hand gestures in real time. The AI-based system uses machine learning to generate a map of the hand. This map can be created with the help of just a single camera, which makes the system easier to implement.

Efforts to track hand gestures have so far been held back by the difficulty of following quick hand movements accurately. Google’s new AI research addresses this complexity. However, Google’s researchers have so far run the algorithms on only a very limited set of data.

How the Real-Time Hand-Tracking Works

Existing hand-tracking technologies try to interpret gestures by detecting both the size and position of the complete hand. Google’s researchers have removed the need to handle rectangular shapes of various sizes. Their algorithm first recognizes only the palm, which fits a square shape, and then carries out a separate analysis for the fingers.
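To see why restricting detection to square shapes can simplify the problem, consider that many object detectors score a fixed set of candidate boxes at every location of a grid. The sketch below is only an illustration of that general idea, not Google's implementation; the grid size, scales, aspect ratios, and the `make_anchors` helper are all hypothetical.

```python
from itertools import product


def make_anchors(grid_size, scales, aspect_ratios):
    """Return candidate boxes (cx, cy, w, h) in normalized [0, 1] coordinates.

    One box is produced per grid cell, per scale, per aspect ratio.
    All parameter values used below are illustrative assumptions.
    """
    anchors = []
    for row, col in product(range(grid_size), repeat=2):
        cx = (col + 0.5) / grid_size  # box center, x
        cy = (row + 0.5) / grid_size  # box center, y
        for scale, ratio in product(scales, aspect_ratios):
            w = scale * ratio ** 0.5  # ratio = width / height
            h = scale / ratio ** 0.5
            anchors.append((cx, cy, w, h))
    return anchors


SCALES = (0.1, 0.2)  # hypothetical box sizes

# Whole hands vary in shape, so a detector might need several aspect ratios.
mixed = make_anchors(8, SCALES, aspect_ratios=(0.5, 1.0, 2.0))

# A palm fits a square, so a single aspect ratio is enough.
square_only = make_anchors(8, SCALES, aspect_ratios=(1.0,))

print(len(mixed), len(square_only))
```

With these assumed settings, the square-only detector has one third as many candidate boxes to score as the mixed one.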

A Google blog post reports that the tech company introduced a new technique for hand and finger-tracking as well as gesture recognition. Three AI models were trained separately and designed to deliver a very robust system capable of fast hand and finger recognition in 3D. The system proved to be quite reliable even in cases where parts of the hand were hidden.

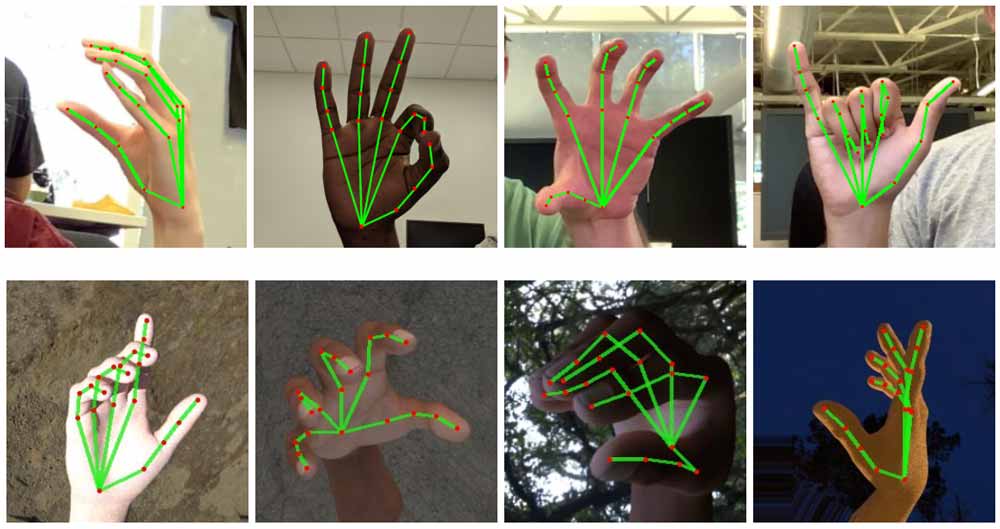

The first model locates the person’s palm rather than measuring the dimensions of the entire hand. A second model then looks at the palm and fingers and assigns 21 trackable coordinates across them, including the fingertips and knuckles.
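The 21 coordinates can be pictured as a small fixed-length list of named points. The following is a minimal sketch of one plausible layout, assuming a wrist point plus four points per finger; the names, the ordering, and the `Landmark` type are assumptions made for illustration, since the article does not specify them.

```python
from dataclasses import dataclass

# Assumed layout: 1 wrist point + 5 fingers x 4 points = 21 points.
FINGERS = ("thumb", "index", "middle", "ring", "pinky")
JOINTS = ("base", "lower_knuckle", "upper_knuckle", "tip")

LANDMARK_NAMES = ["wrist"] + [f"{f}_{j}" for f in FINGERS for j in JOINTS]


@dataclass(frozen=True)
class Landmark:
    """One tracked point; x and y are image coordinates, z is relative depth."""
    name: str
    x: float
    y: float
    z: float


def fingertip_indices():
    """Return the positions of the five fingertip points in the list."""
    return [i for i, name in enumerate(LANDMARK_NAMES) if name.endswith("_tip")]


# Example: a placeholder hand with every point at the origin.
hand = [Landmark(name, 0.0, 0.0, 0.0) for name in LANDMARK_NAMES]
print(len(hand), fingertip_indices())
```

Keeping the points in a fixed order means later stages can refer to, say, the index fingertip by position without searching for it.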

The third AI model is in charge of gesture recognition. It was trained on approximately 30,000 annotated images of finger gestures drawn from different cultures. The researchers marked the 21 coordinates on these images, which show hands in various poses and lighting conditions.
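To make the step from coordinates to gestures concrete, here is a deliberately simple rule-based stand-in, not the trained model the article describes. It assumes 21 points ordered as wrist first, then four points per finger from thumb to pinky, and treats a finger as extended when its tip lies farther from the wrist than its middle joint. The gesture table, the index layout, and every function name are hypothetical.

```python
import math

WRIST = 0
# (middle joint index, fingertip index) per finger, under the assumed layout.
FINGER_JOINTS = {
    "thumb": (2, 4),
    "index": (6, 8),
    "middle": (10, 12),
    "ring": (14, 16),
    "pinky": (18, 20),
}

# Finger states (thumb, index, middle, ring, pinky) -> illustrative label.
GESTURES = {
    (False, False, False, False, False): "fist",
    (True, True, True, True, True): "open_palm",
    (False, True, True, False, False): "victory",
    (False, True, False, False, False): "pointing",
}


def finger_states(points):
    """Return a tuple of booleans: True where a finger looks extended."""
    wrist = points[WRIST]
    return tuple(
        math.dist(points[tip], wrist) > math.dist(points[mid], wrist)
        for mid, tip in FINGER_JOINTS.values()
    )


def classify(points):
    """Map 21 (x, y) points to a gesture label, or 'unknown'."""
    return GESTURES.get(finger_states(points), "unknown")


def synthetic_hand(extended):
    """Build fake 2D points for testing; not real tracking output."""
    points = [(0.0, 0.0)] * 21
    for k, (mid, tip) in enumerate(FINGER_JOINTS.values()):
        points[mid] = (float(k), 2.0)
        points[tip] = (float(k), 3.0 if extended[k] else 1.0)
    return points


print(classify(synthetic_hand((False, True, True, False, False))))
```

A learned model would cope with rotation, occlusion, and cultural variation far better than these fixed rules, which is presumably why the researchers trained one on annotated images.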

Apart from the real recordings, the researchers also trained the AI on a high-quality synthetic hand model rendered against different backgrounds. This was done to make the tracking more robust.

The new tracking process can run even on smartphones. The pre-trained artificial intelligence models can be run with TensorFlow Lite, Google’s machine learning runtime optimized for mobile devices.

For the optical detection, the researchers used a conventional RGB camera of the kind found on the front and back of almost every smartphone.

The Google developers have open-sourced the software. Both the code and the demo are freely available on GitHub. Google hopes that other developers will find it, improve on it, and creatively apply it to other use cases, stimulating new applications and research avenues. The system is built on Google’s MediaPipe framework.

But sign language relies on more than just hand gestures. It also depends on facial expressions and other cues, so it will be a long time before we have a flawless sign language recognition system that accurately translates sign language into spoken or written language across cultures.

Source: https://virtualrealitytimes.com/2019/08/22/googles-ai-hand-tracking-algorithm-could-be-a-major-step-to-sign-language-recognition/

Author: Sam Ochanji, Editor, Virtual Reality Times